GNU Scientific Library

Reference Manual

Edition 1.0, for GSL Version 1.0

1 November 2001

Mark Galassi

Jim Davies

James Theiler

Brian Gough

Gerard Jungman

Michael Booth

Fabrice Rossi


Los Alamos National Laboratory

Department of Computer Science, Georgia Institute of Technology

Astrophysics and Radiation Measurements Group, Los Alamos National Laboratory

Network Theory Limited

Theoretical Fluid Dynamics Group, Los Alamos National Laboratory

Department of Physics and Astronomy, The Johns Hopkins University

University of Paris-Dauphine

Copyright (C) 1996, 1997, 1998, 1999, 2000, 2001 The GSL Team.

Permission is granted to copy, distribute and/or modify this document

under the terms of the GNU Free Documentation License, Version 1.1

or any later version published by the Free Software Foundation;

with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts.

A copy of the license is included in the section entitled "GNU

Free Documentation License".

The GNU Scientific Library (GSL) is a collection of routines for

numerical computing. The routines have been written from scratch in C,

and are meant to present a modern Applications Programming Interface

(API) for C programmers, while allowing wrappers to be written for very

high level languages. The source code is distributed under the GNU

General Public License.

The library covers a wide range of topics in numerical computing.

Routines are available for the following areas,

| Complex Numbers | Roots of Polynomials |

| Special Functions | Vectors and Matrices |

| Permutations | Sorting |

| BLAS Support | Linear Algebra |

| Eigensystems | Fast Fourier Transforms |

| Quadrature | Random Numbers |

| Quasi-Random Sequences | Random Distributions |

| Statistics | Histograms |

| N-Tuples | Monte Carlo Integration |

| Simulated Annealing | Differential Equations |

| Interpolation | Numerical Differentiation |

| Chebyshev Approximations | Series Acceleration |

| Discrete Hankel Transforms | Root-Finding |

| Minimization | Least-Squares Fitting |

| Physical Constants | IEEE Floating-Point |

The use of these routines is described in this manual. Each chapter

provides detailed definitions of the functions, followed by example

programs and references to the articles on which the algorithms are

based.

The subroutines in the GNU Scientific Library are "free software";

this means that everyone is free to use them, and to redistribute them

in other free programs. The library is not in the public domain; it is

copyrighted and there are conditions on its distribution. These

conditions are designed to permit everything that a good cooperating

citizen would want to do. What is not allowed is to try to prevent

others from further sharing any version of the software that they might

get from you.

Specifically, we want to make sure that you have the right to give away

copies of any programs related to the GNU Scientific Library, that you

receive their source code or else can get it if you want it, that you

can change these programs or use pieces of them in new free programs,

and that you know you can do these things. The library should not be

redistributed in proprietary programs.

To make sure that everyone has such rights, we have to forbid you to

deprive anyone else of these rights. For example, if you distribute

copies of any related code, you must give the recipients all the rights

that you have. You must make sure that they, too, receive or can get

the source code. And you must tell them their rights.

Also, for our own protection, we must make certain that everyone finds

out that there is no warranty for the GNU Scientific Library. If these

programs are modified by someone else and passed on, we want their

recipients to know that what they have is not what we distributed, so

that any problems introduced by others will not reflect on our

reputation.

The precise conditions for the distribution of software related to the

GNU Scientific Library are found in the GNU General Public License

(see section GNU General Public License). Further information about this

license is available from the GNU Project webpage Frequently Asked

Questions about the GNU GPL,

The source code for the library can be obtained in different ways, by

copying it from a friend, purchasing it on CDROM or downloading it

from the internet. A list of public ftp servers which carry the source

code can be found on the development website,

The preferred platform for the library is a GNU system, which

allows it to take advantage of additional features. The library is

portable and compiles on most Unix platforms. It is also available for

Microsoft Windows. Precompiled versions of the library can be purchased

from commercial redistributors listed on the website.

Announcements of new releases, updates and other relevant events are

made on the gsl-announce mailing list. To subscribe to this

low-volume list, send an email of the following form,

To: gsl-announce-request@sources.redhat.com

Subject: subscribe

You will receive a response asking you to reply in order to confirm

your subscription.

The following short program demonstrates the use of the library by

computing the value of the Bessel function J_0(x) for x=5,

#include <stdio.h>
#include <gsl/gsl_sf_bessel.h>

int
main (void)
{
  double x = 5.0;
  double y = gsl_sf_bessel_J0 (x);
  printf("J0(%g) = %.18e\n", x, y);
  return 0;
}

The output is shown below, and should be correct to double-precision

accuracy,

J0(5) = -1.775967713143382920e-01

The steps needed to compile programs which use the library are described

in the next chapter.

The software described in this manual has no warranty; it is provided

"as is". It is your responsibility to validate the behavior of the

routines and their accuracy using the source code provided. Consult the

GNU General Public license for further details (see section GNU General Public License).

Additional information, including online copies of this manual, links to

related projects, and mailing list archives are available from the

development website mentioned above. The developers of the library can

be reached via the project's public mailing list,

gsl-discuss@sources.redhat.com

This mailing list can be used to report bugs or to ask questions not

covered by this manual.

This chapter describes how to compile programs that use GSL, and

introduces its conventions.

The library is written in ANSI C and is intended to conform to the ANSI

C standard. It should be portable to any system with a working ANSI C

compiler.

The library does not rely on any non-ANSI extensions in the interface it

exports to the user. Programs you write using GSL can be ANSI

compliant. Extensions which can be used in a way compatible with pure

ANSI C are supported, however, via conditional compilation. This allows

the library to take advantage of compiler extensions on those platforms

which support them.

When an ANSI C feature is known to be broken on a particular system the

library will exclude any related functions at compile-time. This should

make it impossible to link a program that would use these functions and

give incorrect results.

To avoid namespace conflicts all exported function names and variables

have the prefix gsl_, while exported macros have the prefix

GSL_.

The library header files are installed in their own `gsl'

directory. You should write any preprocessor include statements with a

`gsl/' directory prefix thus,

#include <gsl/gsl_math.h>

If the directory is not installed on the standard search path of your

compiler you will also need to provide its location to the preprocessor

as a command line flag. The default location of the `gsl'

directory is `/usr/local/include/gsl'.

The library is installed as a single file, `libgsl.a'. A shared

version of the library is also installed on systems that support shared

libraries. The default location of these files is

`/usr/local/lib'. To link against the library you need to specify

both the main library and a supporting CBLAS library, which

provides standard basic linear algebra subroutines. A suitable

CBLAS implementation is provided in the library

`libgslcblas.a' if your system does not provide one. The following

example shows how to link an application with the library,

gcc app.o -lgsl -lgslcblas -lm

The following command line shows how you would link the same application

with an alternative blas library called `libcblas',

gcc app.o -lgsl -lcblas -lm

For the best performance an optimized platform-specific CBLAS

library should be used for -lcblas. The library must conform to

the CBLAS standard. The ATLAS package provides a portable

high-performance BLAS library with a CBLAS interface. It is

free software and should be installed for any work requiring fast vector

and matrix operations. The following command line will link with the

ATLAS library and its CBLAS interface,

gcc app.o -lgsl -lcblas -latlas -lm

For more information see section BLAS Support.

The program gsl-config provides information on the local version

of the library. For example, the following command shows that the

library has been installed under the directory `/usr/local',

bash$ gsl-config --prefix

/usr/local

Further information is available using the command gsl-config --help.

To run a program linked with the shared version of the library it may be

necessary to define the shell variable LD_LIBRARY_PATH to include

the directory where the library is installed. For example,

LD_LIBRARY_PATH=/usr/local/lib:$LD_LIBRARY_PATH ./app

To compile a statically linked version of the program instead, use the

-static flag in gcc,

gcc -static app.o -lgsl -lgslcblas -lm

For applications using autoconf the standard macro

AC_CHECK_LIB can be used to link with the library automatically

from a configure script. The library itself depends on the

presence of a CBLAS and math library as well, so these must also be

located before linking with the main libgsl file. The following

commands should be placed in the `configure.in' file to perform

these tests,

AC_CHECK_LIB(m,main)

AC_CHECK_LIB(gslcblas,main)

AC_CHECK_LIB(gsl,main)

Assuming the libraries are found the output during the configure stage

looks like this,

checking for main in -lm... yes

checking for main in -lgslcblas... yes

checking for main in -lgsl... yes

If the library is found then the tests will define the macros

HAVE_LIBGSL, HAVE_LIBGSLCBLAS, HAVE_LIBM and add

the options -lgsl -lgslcblas -lm to the variable LIBS.

The tests above will find any version of the library. They are suitable

for general use, where the versions of the functions are not important.

An alternative macro is available in the file `gsl.m4' to test for

a specific version of the library. To use this macro simply add the

following line to your `configure.in' file instead of the tests

above:

AM_PATH_GSL(GSL_VERSION,

[action-if-found],

[action-if-not-found])

The argument GSL_VERSION should be the two or three digit

MAJOR.MINOR or MAJOR.MINOR.MICRO version number of the release

you require. A suitable choice for action-if-not-found is,

AC_MSG_ERROR(could not find required version of GSL)

Then you can add the variables GSL_LIBS and GSL_CFLAGS to

your Makefile.am files to obtain the correct compiler flags.

GSL_LIBS is equal to the output of the gsl-config --libs

command and GSL_CFLAGS is equal to the output of the gsl-config --cflags

command. For example,

libgsdv_la_LDFLAGS = \

$(GTK_LIBDIR) \

$(GTK_LIBS) -lgsdvgsl $(GSL_LIBS) -lgslcblas

Note that the macro AM_PATH_GSL needs to use the C compiler so it

should appear in the `configure.in' file before the macro

AC_LANG_CPLUSPLUS for programs that use C++.

The inline keyword is not part of ANSI C and the library does not

export any inline function definitions by default. However, the library

provides optional inline versions of performance-critical functions by

conditional compilation. The inline versions of these functions can be

included by defining the macro HAVE_INLINE when compiling an

application.

gcc -c -DHAVE_INLINE app.c

If you use autoconf this macro can be defined automatically.

The following test should be placed in your `configure.in' file,

AC_C_INLINE

if test "$ac_cv_c_inline" != no ; then

AC_DEFINE(HAVE_INLINE,1)

AC_SUBST(HAVE_INLINE)

fi

and the macro will then be defined in the compilation flags or by

including the file `config.h' before any library headers. If you

do not define the macro HAVE_INLINE then the slower non-inlined

versions of the functions will be used instead.

Note that the actual usage of the inline keyword is extern

inline, which eliminates unnecessary function definitions in GCC.

If the form extern inline causes problems with other compilers a

stricter autoconf test can be used, see section Autoconf Macros.

The extended numerical type long double is part of the ANSI C

standard and should be available in every modern compiler. However, the

precision of long double is platform dependent, and this should

be considered when using it. The IEEE standard only specifies the

minimum precision of extended precision numbers, while the precision of

double is the same on all platforms.

In some system libraries the stdio.h formatted input/output

functions printf and scanf are not implemented correctly

for long double. Undefined or incorrect results are avoided by

testing these functions during the configure stage of library

compilation and eliminating certain GSL functions which depend on them

if necessary. The corresponding line in the configure output

looks like this,

checking whether printf works with long double... no

Consequently when long double formatted input/output does not

work on a given system it should be impossible to link a program which

uses GSL functions dependent on this.

If it is necessary to work on a system which does not support formatted

long double input/output then the options are to use binary

formats or to convert long double results into double for

reading and writing.

To help in writing portable applications GSL provides some

implementations of functions that are found in other libraries, such as

the BSD math library. You can write your application to use the native

versions of these functions, and substitute the GSL versions via a

preprocessor macro if they are unavailable on another platform. The

substitution can be made automatically if you use autoconf. For

example, to test whether the BSD function hypot is available you

can include the following line in the configure file `configure.in'

for your application,

AC_CHECK_FUNCS(hypot)

and place the following macro definitions in the file

`config.h.in',

/* Substitute gsl_hypot for missing system hypot */

#ifndef HAVE_HYPOT

#define hypot gsl_hypot

#endif

The application source files can then use the include command

#include <config.h> to substitute gsl_hypot for each

occurrence of hypot when hypot is not available.

In most circumstances the best strategy is to use the native versions of

these functions when available, and fall back to GSL versions otherwise,

since this allows your application to take advantage of any

platform-specific optimizations in the system library. This is the

strategy used within GSL itself.

The main implementation of some functions in the library will not be

optimal on all architectures. For example, there are several ways to

compute a Gaussian random variate and their relative speeds are

platform-dependent. In cases like this the library provides alternate

implementations of these functions with the same interface. If you

write your application using calls to the standard implementation you

can select an alternative version later via a preprocessor definition.

It is also possible to introduce your own optimized functions this way

while retaining portability. The following lines demonstrate the use of

a platform-dependent choice of methods for sampling from the Gaussian

distribution,

#ifdef SPARC

#define gsl_ran_gaussian gsl_ran_gaussian_ratio_method

#endif

#ifdef INTEL

#define gsl_ran_gaussian my_gaussian

#endif

These lines would be placed in the configuration header file

`config.h' of the application, which should then be included by all

the source files. Note that the alternative implementations will not

produce bit-for-bit identical results, and in the case of random number

distributions will produce an entirely different stream of random

variates.

Many functions in the library are defined for different numeric types.

This feature is implemented by varying the name of the function with a

type-related modifier -- a primitive form of C++ templates. The

modifier is inserted into the function name after the initial module

prefix. The following table shows the function names defined for all

the numeric types of an imaginary module gsl_foo with function

fn,

gsl_foo_fn double

gsl_foo_long_double_fn long double

gsl_foo_float_fn float

gsl_foo_long_fn long

gsl_foo_ulong_fn unsigned long

gsl_foo_int_fn int

gsl_foo_uint_fn unsigned int

gsl_foo_short_fn short

gsl_foo_ushort_fn unsigned short

gsl_foo_char_fn char

gsl_foo_uchar_fn unsigned char

The normal numeric precision double is considered the default and

does not require a suffix. For example, the function

gsl_stats_mean computes the mean of double precision numbers,

while the function gsl_stats_int_mean computes the mean of

integers.
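The same naming scheme can be imitated in application code with a function-generating macro. The following is a minimal sketch of that idea, not the mechanism used inside the library. The names DEFINE_SKETCH_MEAN, sketch_stats_mean and sketch_stats_int_mean are hypothetical, and returning zero for an empty array is an arbitrary choice made for the sketch,

```c
#include <stddef.h>

/* Hypothetical macro: generates one mean function per numeric type.
   NAME is the full function name, TYPE the element type. */
#define DEFINE_SKETCH_MEAN(NAME, TYPE)                 \
  double NAME (const TYPE data[], size_t n)            \
  {                                                    \
    double sum = 0.0;                                  \
    size_t i;                                          \
    for (i = 0; i < n; i++)                            \
      sum += (double) data[i];                         \
    return (n > 0) ? sum / (double) n : 0.0;           \
  }

/* double is the default type, so its name carries no modifier */
DEFINE_SKETCH_MEAN (sketch_stats_mean, double)

/* the modifier "int" follows the module prefix "sketch_stats" */
DEFINE_SKETCH_MEAN (sketch_stats_int_mean, int)
```

Each expansion produces an ordinary C function, so the two versions can be called side by side on arrays of double and int respectively.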

A corresponding scheme is used for library defined types, such as

gsl_vector and gsl_matrix. In this case the modifier is

appended to the type name. For example, if a module defines a new

type-dependent struct or typedef gsl_foo it is modified for other

types in the following way,

gsl_foo double

gsl_foo_long_double long double

gsl_foo_float float

gsl_foo_long long

gsl_foo_ulong unsigned long

gsl_foo_int int

gsl_foo_uint unsigned int

gsl_foo_short short

gsl_foo_ushort unsigned short

gsl_foo_char char

gsl_foo_uchar unsigned char

When a module contains type-dependent definitions the library provides

individual header files for each type. The filenames are modified as

shown below. For convenience the default header includes the

definitions for all the types. To include only the double precision

header file, or that of any other specific type, use its individual filename.

#include <gsl/gsl_foo.h> All types

#include <gsl/gsl_foo_double.h> double

#include <gsl/gsl_foo_long_double.h> long double

#include <gsl/gsl_foo_float.h> float

#include <gsl/gsl_foo_long.h> long

#include <gsl/gsl_foo_ulong.h> unsigned long

#include <gsl/gsl_foo_int.h> int

#include <gsl/gsl_foo_uint.h> unsigned int

#include <gsl/gsl_foo_short.h> short

#include <gsl/gsl_foo_ushort.h> unsigned short

#include <gsl/gsl_foo_char.h> char

#include <gsl/gsl_foo_uchar.h> unsigned char

The library header files automatically define functions to have

extern "C" linkage when included in C++ programs.
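Headers conventionally obtain this linkage by testing the __cplusplus macro, which is defined only by C++ compilers. The following sketch shows that general idiom. It is an assumption about the technique, not a copy of the GSL headers, and the names sketch_foo.h and sketch_foo_twice are hypothetical,

```c
/* sketch_foo.h -- hypothetical header, not part of GSL */
#ifndef SKETCH_FOO_H
#define SKETCH_FOO_H

#ifdef __cplusplus
extern "C" {            /* seen only by C++ compilers */
#endif

double sketch_foo_twice (double x);

#ifdef __cplusplus
}
#endif

#endif /* SKETCH_FOO_H */

/* definition, normally placed in a separate .c file */
double
sketch_foo_twice (double x)
{
  return 2.0 * x;
}
```

A C compiler ignores the guarded lines entirely, while a C++ compiler declares the function with C linkage and can therefore link against the compiled C code.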

The library assumes that arrays, vectors and matrices passed as

modifiable arguments are not aliased and do not overlap with each other.

This removes the need for the library to handle overlapping memory

regions as a special case, and allows additional optimizations to be

used. If overlapping memory regions are passed as modifiable arguments

then the results of such functions will be undefined. If the arguments

will not be modified (for example, if a function prototype declares them

as const arguments) then overlapping or aliased memory regions

can be safely used.
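The effect of overlapping arguments can be seen with a simple copying routine written on the assumption that its arguments do not overlap. This is an illustrative sketch only; sketch_copy is a hypothetical function and not part of the library,

```c
#include <stddef.h>

/* Hypothetical routine: copies n elements from src to dest,
   written on the assumption that the two regions do not overlap. */
void
sketch_copy (double *dest, const double *src, size_t n)
{
  size_t i;
  for (i = 0; i < n; i++)
    dest[i] = src[i];
}
```

With an array a holding 1, 2, 3, 4, the call sketch_copy (a + 1, a, 3) overwrites each source element before it is read, so the array ends up holding 1, 1, 1, 1 instead of the shifted values 1, 1, 2, 3. A library routine may fail in less predictable ways, which is why its results are described as undefined.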

Where possible the routines in the library have been written to avoid

dependencies between modules and files. This should make it possible to

extract individual functions for use in your own applications, without

needing to have the whole library installed. You may need to define

certain macros such as GSL_ERROR and remove some #include

statements in order to compile the files as standalone units. Reuse of

the library code in this way is encouraged, subject to the terms of the

GNU General Public License.

This chapter describes the way that GSL functions report and handle

errors. By examining the status information returned by every function

you can determine whether it succeeded or failed, and if it failed you

can find out what the precise cause of failure was. You can also define

your own error handling functions to modify the default behavior of the

library.

The functions described in this section are declared in the header file

`gsl_errno.h'.

The library follows the thread-safe error reporting conventions of the

POSIX Threads library. Functions return a non-zero error code to

indicate an error and 0 to indicate success.

int status = gsl_function(...);

if (status) { /* an error occurred */

.....

/* status value specifies the type of error */

}

The routines report an error whenever they cannot perform the task

requested of them. For example, a root-finding function would return a

non-zero error code if it could not converge to the requested accuracy, or

exceeded a limit on the number of iterations. Situations like this are

a normal occurrence when using any mathematical library and you should

check the return status of the functions that you call.

Whenever a routine reports an error the return value specifies the

type of error. The return value is analogous to the value of the

variable errno in the C library. However, the C library's

errno is a global variable, which is not thread-safe (There can

be only one instance of a global variable per program. Different

threads of execution may overwrite errno simultaneously).

Returning the error number directly avoids this problem. The caller can

examine the return code and decide what action to take, including

ignoring the error if it is not considered serious.
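A routine written in this style returns its status through the return value and its result through a pointer argument, so no shared state is involved. The following is a minimal sketch under that assumption. The names sketch_reciprocal, SKETCH_SUCCESS and SKETCH_EDOM and their values are hypothetical; the real codes are defined in `gsl_errno.h',

```c
/* Hypothetical status codes; the real values are defined in gsl_errno.h */
enum { SKETCH_SUCCESS = 0, SKETCH_EDOM = 1 };

/* Hypothetical routine: computes 1/x, returning the result through
   a pointer and the status through the return value. */
int
sketch_reciprocal (double x, double *result)
{
  if (x == 0.0)
    return SKETCH_EDOM;      /* non-zero: argument outside the domain */

  *result = 1.0 / x;
  return SKETCH_SUCCESS;     /* zero: success */
}
```

Since each call hands back its own status, two threads can call the routine at the same time without overwriting each other's error information.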

The error code numbers are defined in the file `gsl_errno.h'. They

all have the prefix GSL_ and expand to non-zero constant integer

values. Many of the error codes use the same base name as a

corresponding error code in the C library. Here are some of the most common

error codes,

- Macro: int GSL_EDOM

-

Domain error; used by mathematical functions when an argument value does

not fall into the domain over which the function is defined (like

EDOM in the C library)

- Macro: int GSL_ERANGE

-

Range error; used by mathematical functions when the result value is not

representable because of overflow or underflow (like ERANGE in the C

library)

- Macro: int GSL_ENOMEM

-

No memory available. The system cannot allocate more virtual memory

because its capacity is full (like ENOMEM in the C library). This error

is reported when a GSL routine encounters problems when trying to

allocate memory with

malloc.

- Macro: int GSL_EINVAL

-

Invalid argument. This is used to indicate various kinds of problems

with passing the wrong argument to a library function (like EINVAL in the C

library).

Here is an example of some code which checks the return value of a

function where an error might be reported,

int status = gsl_fft_complex_radix2_forward (data, stride, n);

if (status) {
  if (status == GSL_EINVAL) {
    fprintf (stderr, "invalid argument, n=%d\n", n);
  } else {
    fprintf (stderr, "failed, gsl_errno=%d\n", status);
  }
  exit (-1);
}

The function gsl_fft_complex_radix2_forward only accepts integer lengths

which are a power of two. If the variable n is not a power

of two then the call to the library function will return

GSL_EINVAL, indicating that the length argument is invalid. The

else clause catches any other possible errors.

The error codes can be converted into an error message using the

function gsl_strerror.

- Function: const char * gsl_strerror (const int gsl_errno)

-

This function returns a pointer to a string describing the error code

gsl_errno. For example,

printf("error: %s\n", gsl_strerror (status));

would print an error message like error: output range error for a

status value of GSL_ERANGE.

In addition to reporting errors the library also provides an optional

error handler. The error handler is called by library functions when

they report an error, just before they return to the caller. The

purpose of the handler is to provide a function where a breakpoint can

be set that will catch library errors when running under the debugger.

It is not intended for use in production programs, which should handle

any errors using the error return codes described above.

The default behavior of the error handler is to print a short message

and call abort() whenever an error is reported by the library.

If this default is not turned off then any program using the library

will stop with a core-dump whenever a library routine reports an error.

This is intended as a fail-safe default for programs which do not check

the return status of library routines (we don't encourage you to write

programs this way). If you turn off the default error handler it is

your responsibility to check the return values of the GSL routines. You

can customize the error behavior by providing a new error handler. For

example, an alternative error handler could log all errors to a file,

ignore certain error conditions (such as underflows), or start the

debugger and attach it to the current process when an error occurs.

All GSL error handlers have the type gsl_error_handler_t, which is

defined in `gsl_errno.h',

- Data Type: gsl_error_handler_t

-

This is the type of GSL error handler functions. An error handler will

be passed four arguments which specify the reason for the error (a

string), the name of the source file in which it occurred (also a

string), the line number in that file (an integer) and the error number

(an integer). The source file and line number are set at compile time

using the __FILE__ and __LINE__ directives in the

preprocessor. An error handler function returns type void.

Error handler functions should be defined like this,

void handler (const char * reason,

const char * file,

int line,

int gsl_errno)

To request the use of your own error handler you need to call the

function gsl_set_error_handler which is also declared in

`gsl_errno.h',

- Function: gsl_error_handler_t gsl_set_error_handler (gsl_error_handler_t new_handler)

-

This function sets a new error handler, new_handler, for the GSL

library routines. The previous handler is returned (so that you can

restore it later). Note that the pointer to a user defined error

handler function is stored in a static variable, so there can only be

one error handler per program. This function should not be used in

multi-threaded programs except to set up a program-wide error handler

from a master thread. The following example shows how to set and

restore a new error handler,

/* save original handler, install new handler */

old_handler = gsl_set_error_handler (&my_handler);

/* code uses new handler */

.....

/* restore original handler */

gsl_set_error_handler (old_handler);

To use the default behavior (abort on error) set the error

handler to NULL,

old_handler = gsl_set_error_handler (NULL);

- Function: gsl_error_handler_t gsl_set_error_handler_off ()

-

This function turns off the error handler by defining an error handler

which does nothing. This will cause the program to continue after any

error, so the return values from any library routines must be checked.

This is the recommended behavior for production programs. The previous

handler is returned (so that you can restore it later).
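The behavior of these two functions can be pictured with a small stand-alone model in which the handler pointer is kept in a static variable. This is a sketch of the mechanism as described above, not the library source, and all names beginning with sketch_ are hypothetical,

```c
#include <stddef.h>

/* Hypothetical handler type with the four arguments described above */
typedef void sketch_handler_t (const char *reason, const char *file,
                               int line, int err);

/* one handler per program: the pointer lives in a static variable */
static sketch_handler_t *current_handler = NULL;

/* Install new_handler and return the previous one. */
sketch_handler_t *
sketch_set_handler (sketch_handler_t *new_handler)
{
  sketch_handler_t *previous = current_handler;
  current_handler = new_handler;
  return previous;
}

/* Handler that does nothing, so the program continues after an error */
static void
sketch_no_handler (const char *reason, const char *file, int line, int err)
{
  (void) reason; (void) file; (void) line; (void) err;
}

/* Install the do-nothing handler and return the previous one. */
sketch_handler_t *
sketch_set_handler_off (void)
{
  return sketch_set_handler (sketch_no_handler);
}
```

Because the pointer is shared by the whole program, a handler installed in one thread is seen by every other thread, which is the reason for the restriction on multi-threaded use.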

The error behavior can be changed for specific applications by

recompiling the library with a customized definition of the

GSL_ERROR macro in the file `gsl_errno.h'.

If you are writing numerical functions in a program which also uses GSL

code you may find it convenient to adopt the same error reporting

conventions as in the library.

To report an error you need to call the function gsl_error with a

string describing the error and then return an appropriate error code

from gsl_errno.h, or a special value, such as NaN. For

convenience the file `gsl_errno.h' defines two macros which carry

out these steps:

- Macro: GSL_ERROR (reason, gsl_errno)

-

This macro reports an error using the GSL conventions and returns a

status value of gsl_errno. It expands to the following code fragment,

gsl_error (reason, __FILE__, __LINE__, gsl_errno);

return gsl_errno;

The macro definition in `gsl_errno.h' actually wraps the code

in a do { ... } while (0) block to prevent possible

parsing problems.

Here is an example of how the macro could be used to report that a

routine did not achieve a requested tolerance. To report the error the

routine needs to return the error code GSL_ETOL.

if (residual > tolerance)

{

GSL_ERROR("residual exceeds tolerance", GSL_ETOL);

}
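The parsing problem avoided by the do { ... } while (0) wrapper arises when a multi-statement macro is used as the body of an if statement that has an else branch. The following sketch demonstrates this with stand-in definitions. The names sketch_error, SKETCH_ERROR, SKETCH_OK and SKETCH_ETOL are hypothetical and their values are chosen only for illustration,

```c
/* Hypothetical stand-in for the library's reporting function:
   it only counts how many errors were reported. */
static int sketch_error_count = 0;

static void
sketch_error (const char *reason, const char *file, int line, int err)
{
  (void) reason; (void) file; (void) line; (void) err;
  sketch_error_count++;
}

/* Two statements wrapped so that the macro acts as one statement */
#define SKETCH_ERROR(reason, err)                        \
  do {                                                   \
    sketch_error (reason, __FILE__, __LINE__, err);      \
    return err;                                          \
  } while (0)

enum { SKETCH_OK = 0, SKETCH_ETOL = 1 };

int
sketch_check (double residual, double tolerance)
{
  if (residual > tolerance)
    SKETCH_ERROR ("residual exceeds tolerance", SKETCH_ETOL);
  else
    return SKETCH_OK;
}
```

If the macro expanded to two bare statements, or to a brace-enclosed block followed by the caller's semicolon, the else above would no longer attach to the if and the function would not compile.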

- Macro: GSL_ERROR_VAL (reason, gsl_errno, value)

-

This macro is the same as GSL_ERROR but returns a user-defined

status value of value instead of an error code. It can be used for

mathematical functions that return a floating point value.

Here is an example where a function needs to return a NaN because

of a mathematical singularity,

if (x == 0)

{

GSL_ERROR_VAL("argument lies on singularity",

GSL_ERANGE, GSL_NAN);

}

This chapter describes basic mathematical functions. Some of these

functions are present in system libraries, but the alternative versions

given here can be used as a substitute when the system functions are not

available.

The functions and macros described in this chapter are defined in the

header file `gsl_math.h'.

The library ensures that the standard BSD mathematical constants

are defined. For reference here is a list of the constants.

M_E

-

The base of exponentials, e

M_LOG2E

-

The base-2 logarithm of e, \log_2 (e)

M_LOG10E

-

The base-10 logarithm of e, \log_{10} (e)

M_SQRT2

-

The square root of two, \sqrt 2

M_SQRT1_2

-

The square root of one-half, \sqrt{1/2}

M_SQRT3

-

The square root of three, \sqrt 3

M_PI

-

The constant pi, \pi

M_PI_2

-

Pi divided by two, \pi/2

M_PI_4

-

Pi divided by four, \pi/4

M_SQRTPI

-

The square root of pi, \sqrt\pi

M_2_SQRTPI

-

Two divided by the square root of pi, 2/\sqrt\pi

M_1_PI

-

The reciprocal of pi, 1/\pi

M_2_PI

-

Twice the reciprocal of pi, 2/\pi

M_LN10

-

The natural logarithm of ten, \ln(10)

M_LN2

-

The natural logarithm of two, \ln(2)

M_LNPI

-

The natural logarithm of pi, \ln(\pi)

M_EULER

-

Euler's constant, \gamma

- Macro: GSL_POSINF

-

This macro contains the IEEE representation of positive infinity,

+\infty. It is computed from the expression

+1.0/0.0.

- Macro: GSL_NEGINF

-

This macro contains the IEEE representation of negative infinity,

-\infty. It is computed from the expression

-1.0/0.0.

- Macro: GSL_NAN

-

This macro contains the IEEE representation of the Not-a-Number symbol,

NaN. It is computed from the ratio 0.0/0.0.

- Function: int gsl_isnan (const double x)

-

This function returns 1 if x is not-a-number.

- Function: int gsl_isinf (const double x)

-

This function returns +1 if x is positive infinity,

-1 if x is negative infinity and 0 otherwise.

- Function: int gsl_finite (const double x)

-

This function returns 1 if x is a real number, and 0 if it is

infinite or not-a-number.
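On a system with IEEE arithmetic these three tests can be expressed using only comparisons and a subtraction. The following sketch shows one possible approach. It is not necessarily how the library functions are implemented, the sketch_ names are hypothetical, and the code assumes the compiler has not been told to relax IEEE semantics,

```c
/* Hypothetical equivalents, assuming IEEE arithmetic */

/* NaN is the only value that compares unequal to itself */
int
sketch_isnan (double x)
{
  return x != x;
}

/* x - x is zero for finite x, but NaN for infinities and NaNs */
int
sketch_isinf (double x)
{
  double y = x - x;
  if (x == x && y != y)
    return (x > 0) ? 1 : -1;
  return 0;
}

/* finite values are exactly those for which x - x is not NaN */
int
sketch_finite (double x)
{
  double y = x - x;
  return y == y;
}
```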

The following routines provide portable implementations of functions

found in the BSD math library. When native versions are not available

the functions described here can be used instead. The substitution can

be made automatically if you use autoconf to compile your

application (see section Portability functions).

- Function: double gsl_log1p (const double x)

-

This function computes the value of \log(1+x) in a way that is

accurate for small x. It provides an alternative to the BSD math

function

log1p(x).

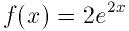

- Function: double gsl_expm1 (const double x)

-

This function computes the value of \exp(x)-1 in a way that is

accurate for small x. It provides an alternative to the BSD math

function

expm1(x).

- Function: double gsl_hypot (const double x, const double y)

-

This function computes the value of

\sqrt{x^2 + y^2} in a way that avoids overflow. It provides an

alternative to the BSD math function

hypot(x,y).

- Function: double gsl_acosh (const double x)

-

This function computes the value of \arccosh(x). It provides an

alternative to the standard math function

acosh(x).

- Function: double gsl_asinh (const double x)

-

This function computes the value of \arcsinh(x). It provides an

alternative to the standard math function

asinh(x).

- Function: double gsl_atanh (const double x)

-

This function computes the value of \arctanh(x). It provides an

alternative to the standard math function

atanh(x).

A common complaint about the standard C library is its lack of a

function for calculating (small) integer powers. GSL provides some simple

functions to fill this gap. For reasons of efficiency, these functions

do not check for overflow or underflow conditions.

- Function: double gsl_pow_int (double x, int n)

-

This routine computes the power x^n for integer n. The

power is computed using the minimum number of multiplications. For

example, x^8 is computed as ((x^2)^2)^2, requiring only 3

multiplications. A version of this function which also computes the

numerical error in the result is available as

gsl_sf_pow_int_e.

- Function: double gsl_pow_2 (const double x)

-

- Function: double gsl_pow_3 (const double x)

-

- Function: double gsl_pow_4 (const double x)

-

- Function: double gsl_pow_5 (const double x)

-

- Function: double gsl_pow_6 (const double x)

-

- Function: double gsl_pow_7 (const double x)

-

- Function: double gsl_pow_8 (const double x)

-

- Function: double gsl_pow_9 (const double x)

-

These functions can be used to compute small integer powers x^2,

x^3, etc. efficiently. The functions will be inlined when

possible so that use of these functions should be as efficient as

explicitly writing the corresponding product expression.

#include <gsl/gsl_math.h>

double y = gsl_pow_4 (3.141); /* compute 3.141**4 */

- Macro: GSL_SIGN (x)

-

This macro returns the sign of x. It is defined as

((x) >= 0

? 1 : -1). Note that with this definition the sign of zero is positive

(regardless of its IEEE sign bit).

- Macro: GSL_IS_ODD (n)

-

This macro evaluates to 1 if n is odd and 0 if n is

even. The argument n must be of integer type.

- Macro: GSL_IS_EVEN (n)

-

This macro is the opposite of

GSL_IS_ODD(n). It evaluates to 1 if

n is even and 0 if n is odd. The argument n must be of

integer type.

- Macro: GSL_MAX (a, b)

-

This macro returns the maximum of a and b. It is defined as

((a) > (b) ? (a):(b)).

- Macro: GSL_MIN (a, b)

-

This macro returns the minimum of a and b. It is defined as

((a) < (b) ? (a):(b)).

- Function: extern inline double GSL_MAX_DBL (double a, double b)

-

This function returns the maximum of the double precision numbers

a and b using an inline function. The use of a function

allows for type checking of the arguments as an extra safety feature. On

platforms where inline functions are not available the macro

GSL_MAX will be automatically substituted.

- Function: extern inline double GSL_MIN_DBL (double a, double b)

-

This function returns the minimum of the double precision numbers

a and b using an inline function. The use of a function

allows for type checking of the arguments as an extra safety feature. On

platforms where inline functions are not available the macro

GSL_MIN will be automatically substituted.

- Function: extern inline int GSL_MAX_INT (int a, int b)

-

- Function: extern inline int GSL_MIN_INT (int a, int b)

-

These functions return the maximum or minimum of the integers a

and b using an inline function. On platforms where inline

functions are not available the macros

GSL_MAX or GSL_MIN

will be automatically substituted.

- Function: extern inline long double GSL_MAX_LDBL (long double a, long double b)

-

- Function: extern inline long double GSL_MIN_LDBL (long double a, long double b)

-

These functions return the maximum or minimum of the long doubles a

and b using an inline function. On platforms where inline

functions are not available the macros

GSL_MAX or GSL_MIN

will be automatically substituted.

The functions described in this chapter provide support for complex

numbers. The algorithms take care to avoid unnecessary intermediate

underflows and overflows, allowing the functions to be evaluated over

as much of the complex plane as possible.

For multiple-valued functions the branch cuts have been chosen to follow

the conventions of Abramowitz and Stegun in the Handbook of

Mathematical Functions. The functions return principal values which are

the same as those in GNU Calc, which in turn are the same as those in

Common Lisp, The Language (Second Edition) (n.b. The second

edition uses different definitions from the first edition) and the

HP-28/48 series of calculators.

The complex types are defined in the header file `gsl_complex.h',

while the corresponding complex functions and arithmetic operations are

defined in `gsl_complex_math.h'.

Complex numbers are represented using the type gsl_complex. The

internal representation of this type may vary across platforms and

should not be accessed directly. The functions and macros described

below allow complex numbers to be manipulated in a portable way.

For reference, the default form of the gsl_complex type is

given by the following struct,

typedef struct

{

double dat[2];

} gsl_complex;

The real and imaginary parts are stored in contiguous elements of a

two-element array. This eliminates any padding between the real and

imaginary parts, dat[0] and dat[1], allowing the struct to

be mapped correctly onto packed complex arrays.

- Function: gsl_complex gsl_complex_rect (double x, double y)

-

This function uses the rectangular cartesian components

(x,y) to return the complex number z = x + i y.

- Function: gsl_complex gsl_complex_polar (double r, double theta)

-

This function returns the complex number z = r \exp(i \theta) = r

(\cos(\theta) + i \sin(\theta)) from the polar representation

(r,theta).

- Macro: GSL_REAL (z)

-

- Macro: GSL_IMAG (z)

-

These macros return the real and imaginary parts of the complex number

z.

- Macro: GSL_SET_COMPLEX (zp, x, y)

-

This macro uses the cartesian components (x,y) to set the

real and imaginary parts of the complex number pointed to by zp.

For example,

GSL_SET_COMPLEX(&z, 3, 4)

sets z to be 3 + 4i.

- Macro: GSL_SET_REAL (zp,x)

-

- Macro: GSL_SET_IMAG (zp,y)

-

These macros allow the real and imaginary parts of the complex number

pointed to by zp to be set independently.

- Function: double gsl_complex_arg (gsl_complex z)

-

This function returns the argument of the complex number z,

\arg(z), where -\pi < \arg(z) \leq \pi.

- Function: double gsl_complex_abs (gsl_complex z)

-

This function returns the magnitude of the complex number z, |z|.

- Function: double gsl_complex_abs2 (gsl_complex z)

-

This function returns the squared magnitude of the complex number

z, |z|^2.

- Function: double gsl_complex_logabs (gsl_complex z)

-

This function returns the natural logarithm of the magnitude of the

complex number z, \log|z|. It allows an accurate

evaluation of \log|z| when |z| is close to one. The direct

evaluation of

log(gsl_complex_abs(z)) would lead to a loss of

precision in this case.

- Function: gsl_complex gsl_complex_add (gsl_complex a, gsl_complex b)

-

This function returns the sum of the complex numbers a and

b, z=a+b.

- Function: gsl_complex gsl_complex_sub (gsl_complex a, gsl_complex b)

-

This function returns the difference of the complex numbers a and

b, z=a-b.

- Function: gsl_complex gsl_complex_mul (gsl_complex a, gsl_complex b)

-

This function returns the product of the complex numbers a and

b, z=ab.

- Function: gsl_complex gsl_complex_div (gsl_complex a, gsl_complex b)

-

This function returns the quotient of the complex numbers a and

b, z=a/b.

- Function: gsl_complex gsl_complex_add_real (gsl_complex a, double x)

-

This function returns the sum of the complex number a and the

real number x, z=a+x.

- Function: gsl_complex gsl_complex_sub_real (gsl_complex a, double x)

-

This function returns the difference of the complex number a and the

real number x, z=a-x.

- Function: gsl_complex gsl_complex_mul_real (gsl_complex a, double x)

-

This function returns the product of the complex number a and the

real number x, z=ax.

- Function: gsl_complex gsl_complex_div_real (gsl_complex a, double x)

-

This function returns the quotient of the complex number a and the

real number x, z=a/x.

- Function: gsl_complex gsl_complex_add_imag (gsl_complex a, double y)

-

This function returns the sum of the complex number a and the

imaginary number iy, z=a+iy.

- Function: gsl_complex gsl_complex_sub_imag (gsl_complex a, double y)

-

This function returns the difference of the complex number a and the

imaginary number iy, z=a-iy.

- Function: gsl_complex gsl_complex_mul_imag (gsl_complex a, double y)

-

This function returns the product of the complex number a and the

imaginary number iy, z=a*(iy).

- Function: gsl_complex gsl_complex_div_imag (gsl_complex a, double y)

-

This function returns the quotient of the complex number a and the

imaginary number iy, z=a/(iy).

- Function: gsl_complex gsl_complex_conjugate (gsl_complex z)

-

This function returns the complex conjugate of the complex number

z, z^* = x - i y.

- Function: gsl_complex gsl_complex_inverse (gsl_complex z)

-

This function returns the inverse, or reciprocal, of the complex number

z, 1/z = (x - i y)/(x^2 + y^2).

- Function: gsl_complex gsl_complex_negative (gsl_complex z)

-

This function returns the negative of the complex number

z, -z = (-x) + i(-y).

- Function: gsl_complex gsl_complex_sqrt (gsl_complex z)

-

This function returns the square root of the complex number z,

\sqrt z. The branch cut is the negative real axis. The result

always lies in the right half of the complex plane.

- Function: gsl_complex gsl_complex_sqrt_real (double x)

-

This function returns the complex square root of the real number

x, where x may be negative.

- Function: gsl_complex gsl_complex_pow (gsl_complex z, gsl_complex a)

-

The function returns the complex number z raised to the complex

power a, z^a. This is computed as \exp(\log(z)*a)

using complex logarithms and complex exponentials.

- Function: gsl_complex gsl_complex_pow_real (gsl_complex z, double x)

-

This function returns the complex number z raised to the real

power x, z^x.

- Function: gsl_complex gsl_complex_exp (gsl_complex z)

-

This function returns the complex exponential of the complex number

z, \exp(z).

- Function: gsl_complex gsl_complex_log (gsl_complex z)

-

This function returns the complex natural logarithm (base e) of

the complex number z, \log(z). The branch cut is the

negative real axis.

- Function: gsl_complex gsl_complex_log10 (gsl_complex z)

-

This function returns the complex base-10 logarithm of

the complex number z, \log_{10}(z).

- Function: gsl_complex gsl_complex_log_b (gsl_complex z, gsl_complex b)

-

This function returns the complex base-b logarithm of the complex

number z, \log_b(z). This quantity is computed as the ratio

\log(z)/\log(b).

- Function: gsl_complex gsl_complex_sin (gsl_complex z)

-

This function returns the complex sine of the complex number z,

\sin(z) = (\exp(iz) - \exp(-iz))/(2i).

- Function: gsl_complex gsl_complex_cos (gsl_complex z)

-

This function returns the complex cosine of the complex number z,

\cos(z) = (\exp(iz) + \exp(-iz))/2.

- Function: gsl_complex gsl_complex_tan (gsl_complex z)

-

This function returns the complex tangent of the complex number z,

\tan(z) = \sin(z)/\cos(z).

- Function: gsl_complex gsl_complex_sec (gsl_complex z)

-

This function returns the complex secant of the complex number z,

\sec(z) = 1/\cos(z).

- Function: gsl_complex gsl_complex_csc (gsl_complex z)

-

This function returns the complex cosecant of the complex number z,

\csc(z) = 1/\sin(z).

- Function: gsl_complex gsl_complex_cot (gsl_complex z)

-

This function returns the complex cotangent of the complex number z,

\cot(z) = 1/\tan(z).

- Function: gsl_complex gsl_complex_arcsin (gsl_complex z)

-

This function returns the complex arcsine of the complex number z,

\arcsin(z). The branch cuts are on the real axis, less than -1

and greater than 1.

- Function: gsl_complex gsl_complex_arcsin_real (double z)

-

This function returns the complex arcsine of the real number z,

\arcsin(z). For z between -1 and 1, the

function returns a real value in the range [-\pi/2,\pi/2]. For

z less than -1 the result has a real part of -\pi/2

and a positive imaginary part. For z greater than 1 the

result has a real part of \pi/2 and a negative imaginary part.

- Function: gsl_complex gsl_complex_arccos (gsl_complex z)

-

This function returns the complex arccosine of the complex number z,

\arccos(z). The branch cuts are on the real axis, less than -1

and greater than 1.

- Function: gsl_complex gsl_complex_arccos_real (double z)

-

This function returns the complex arccosine of the real number z,

\arccos(z). For z between -1 and 1, the

function returns a real value in the range [0,\pi]. For z

less than -1 the result has a real part of \pi and a

negative imaginary part. For z greater than 1 the result

is purely imaginary and positive.

- Function: gsl_complex gsl_complex_arctan (gsl_complex z)

-

This function returns the complex arctangent of the complex number

z, \arctan(z). The branch cuts are on the imaginary axis,

below -i and above i.

- Function: gsl_complex gsl_complex_arcsec (gsl_complex z)

-

This function returns the complex arcsecant of the complex number z,

\arcsec(z) = \arccos(1/z).

- Function: gsl_complex gsl_complex_arcsec_real (double z)

-

This function returns the complex arcsecant of the real number z,

\arcsec(z) = \arccos(1/z).

- Function: gsl_complex gsl_complex_arccsc (gsl_complex z)

-

This function returns the complex arccosecant of the complex number z,

\arccsc(z) = \arcsin(1/z).

- Function: gsl_complex gsl_complex_arccsc_real (double z)

-

This function returns the complex arccosecant of the real number z,

\arccsc(z) = \arcsin(1/z).

- Function: gsl_complex gsl_complex_arccot (gsl_complex z)

-

This function returns the complex arccotangent of the complex number z,

\arccot(z) = \arctan(1/z).

- Function: gsl_complex gsl_complex_sinh (gsl_complex z)

-

This function returns the complex hyperbolic sine of the complex number

z, \sinh(z) = (\exp(z) - \exp(-z))/2.

- Function: gsl_complex gsl_complex_cosh (gsl_complex z)

-

This function returns the complex hyperbolic cosine of the complex number

z, \cosh(z) = (\exp(z) + \exp(-z))/2.

- Function: gsl_complex gsl_complex_tanh (gsl_complex z)

-

This function returns the complex hyperbolic tangent of the complex number

z, \tanh(z) = \sinh(z)/\cosh(z).

- Function: gsl_complex gsl_complex_sech (gsl_complex z)

-

This function returns the complex hyperbolic secant of the complex

number z, \sech(z) = 1/\cosh(z).

- Function: gsl_complex gsl_complex_csch (gsl_complex z)

-

This function returns the complex hyperbolic cosecant of the complex

number z, \csch(z) = 1/\sinh(z).

- Function: gsl_complex gsl_complex_coth (gsl_complex z)

-

This function returns the complex hyperbolic cotangent of the complex

number z, \coth(z) = 1/\tanh(z).

- Function: gsl_complex gsl_complex_arcsinh (gsl_complex z)

-

This function returns the complex hyperbolic arcsine of the

complex number z, \arcsinh(z). The branch cuts are on the

imaginary axis, below -i and above i.

- Function: gsl_complex gsl_complex_arccosh (gsl_complex z)

-

This function returns the complex hyperbolic arccosine of the complex

number z, \arccosh(z). The branch cut is on the real axis,

less than 1.

- Function: gsl_complex gsl_complex_arccosh_real (double z)

-

This function returns the complex hyperbolic arccosine of

the real number z, \arccosh(z).

- Function: gsl_complex gsl_complex_arctanh (gsl_complex z)

-

This function returns the complex hyperbolic arctangent of the complex

number z, \arctanh(z). The branch cuts are on the real

axis, less than -1 and greater than 1.

- Function: gsl_complex gsl_complex_arctanh_real (double z)

-

This function returns the complex hyperbolic arctangent of the real

number z, \arctanh(z).

- Function: gsl_complex gsl_complex_arcsech (gsl_complex z)

-

This function returns the complex hyperbolic arcsecant of the complex

number z, \arcsech(z) = \arccosh(1/z).

- Function: gsl_complex gsl_complex_arccsch (gsl_complex z)

-

This function returns the complex hyperbolic arccosecant of the complex

number z, \arccsch(z) = \arcsinh(1/z).

- Function: gsl_complex gsl_complex_arccoth (gsl_complex z)

-

This function returns the complex hyperbolic arccotangent of the complex

number z, \arccoth(z) = \arctanh(1/z).

The implementations of the elementary and trigonometric functions are

based on the following papers,

-

T. E. Hull, Thomas F. Fairgrieve, Ping Tak Peter Tang,

"Implementing Complex Elementary Functions Using Exception

Handling", ACM Transactions on Mathematical Software, Volume 20

(1994), pp 215-244, Corrigenda, p553

-

T. E. Hull, Thomas F. Fairgrieve, Ping Tak Peter Tang,

"Implementing the complex arcsin and arccosine functions using exception

handling", ACM Transactions on Mathematical Software, Volume 23

(1997) pp 299-335

The general formulas and details of branch cuts can be found in the

following books,

-

Abramowitz and Stegun, Handbook of Mathematical Functions,

"Circular Functions in Terms of Real and Imaginary Parts", Formulas

4.3.55--58,

"Inverse Circular Functions in Terms of Real and Imaginary Parts",

Formulas 4.4.37--39,

"Hyperbolic Functions in Terms of Real and Imaginary Parts",

Formulas 4.5.49--52,

"Inverse Hyperbolic Functions -- relation to Inverse Circular Functions",

Formulas 4.6.14--19.

-

Dave Gillespie, Calc Manual, Free Software Foundation, ISBN

1-882114-18-3

This chapter describes functions for evaluating and solving polynomials.

There are routines for finding real and complex roots of quadratic and

cubic equations using analytic methods. An iterative polynomial solver

is also available for finding the roots of general polynomials with real

coefficients (of any order). The functions are declared in the header

file `gsl_poly.h'.

- Function: double gsl_poly_eval (const double c[], const int len, const double x)

-

This function evaluates the polynomial

c[0] + c[1] x + c[2] x^2 + \dots + c[len-1] x^{len-1} using

Horner's method for stability. The function is inlined when possible.

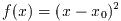

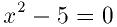

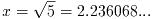

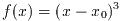

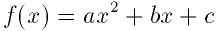

- Function: int gsl_poly_solve_quadratic (double a, double b, double c, double *x0, double *x1)

-

This function finds the real roots of the quadratic equation,

a x^2 + b x + c = 0

The number of real roots (either zero or two) is returned, and their

locations are stored in x0 and x1. If no real roots are

found then x0 and x1 are not modified. When two real roots

are found they are stored in x0 and x1 in ascending

order. The case of coincident roots is not considered special. For

example (x-1)^2=0 will have two roots, which happen to have

exactly equal values.

The number of roots found depends on the sign of the discriminant

b^2 - 4 a c. This will be subject to rounding and cancellation

errors when computed in double precision, and will also be subject to

errors if the coefficients of the polynomial are inexact. These errors

may cause a discrete change in the number of roots. However, for

polynomials with small integer coefficients the discriminant can always

be computed exactly.

- Function: int gsl_poly_complex_solve_quadratic (double a, double b, double c, gsl_complex *z0, gsl_complex *z1)

-

This function finds the complex roots of the quadratic equation,

a x^2 + b x + c = 0

The number of complex roots is returned (always two) and the locations

of the roots are stored in z0 and z1. The roots are returned

in ascending order, sorted first by their real components and then by

their imaginary components.

- Function: int gsl_poly_solve_cubic (double a, double b, double c, double *x0, double *x1, double *x2)

-

This function finds the real roots of the cubic equation,

x^3 + a x^2 + b x + c = 0

with a leading coefficient of unity. The number of real roots (either

one or three) is returned, and their locations are stored in x0,

x1 and x2. If one real root is found then only x0 is

modified. When three real roots are found they are stored in x0,

x1 and x2 in ascending order. The case of coincident roots

is not considered special. For example, the equation (x-1)^3=0

will have three roots with exactly equal values.

- Function: int gsl_poly_complex_solve_cubic (double a, double b, double c, gsl_complex *z0, gsl_complex *z1, gsl_complex *z2)

-

This function finds the complex roots of the cubic equation,

x^3 + a x^2 + b x + c = 0

The number of complex roots is returned (always three) and the locations

of the roots are stored in z0, z1 and z2. The roots

are returned in ascending order, sorted first by their real components

and then by their imaginary components.

The roots of polynomial equations cannot be found analytically beyond

the special cases of the quadratic, cubic and quartic equation. The

algorithm described in this section uses an iterative method to find the

approximate locations of roots of higher order polynomials.

- Function: gsl_poly_complex_workspace * gsl_poly_complex_workspace_alloc (size_t n)

-

This function allocates space for a

gsl_poly_complex_workspace

struct and a workspace suitable for solving a polynomial with n

coefficients using the routine gsl_poly_complex_solve.

The function returns a pointer to the newly allocated

gsl_poly_complex_workspace if no errors were detected, and a null

pointer in the case of error.

- Function: void gsl_poly_complex_workspace_free (gsl_poly_complex_workspace * w)

-

This function frees all the memory associated with the workspace

w.

- Function: int gsl_poly_complex_solve (const double * a, size_t n, gsl_poly_complex_workspace * w, gsl_complex_packed_ptr z)

-

This function computes the roots of the general polynomial

P(x) = a_0 + a_1 x + a_2 x^2 + ... + a_{n-1} x^{n-1} using

balanced-QR reduction of the companion matrix. The parameter n

specifies the length of the coefficient array. The coefficient of the

highest order term must be non-zero. The function requires a workspace

w of the appropriate size. The n-1 roots are returned in

the packed complex array z of length 2(n-1), alternating

real and imaginary parts.

The function returns GSL_SUCCESS if all the roots are found and

GSL_EFAILED if the QR reduction does not converge.

To demonstrate the use of the general polynomial solver we will take the

polynomial P(x) = x^5 - 1 which has the following roots,

1, \exp(2\pi i/5), \exp(4\pi i/5), \exp(6\pi i/5), \exp(8\pi i/5)

The following program will find these roots.

#include <stdio.h>

#include <gsl/gsl_poly.h>

int

main (void)

{

int i;

/* coefficient of P(x) = -1 + x^5 */

double a[6] = { -1, 0, 0, 0, 0, 1 };

double z[10];

gsl_poly_complex_workspace * w

= gsl_poly_complex_workspace_alloc (6);

gsl_poly_complex_solve (a, 6, w, z);

gsl_poly_complex_workspace_free (w);

for (i = 0; i < 5; i++)

{

printf("z%d = %+.18f %+.18f\n",

i, z[2*i], z[2*i+1]);

}

return 0;

}

The output of the program is,

bash$ ./a.out

z0 = -0.809016994374947451 +0.587785252292473137

z1 = -0.809016994374947451 -0.587785252292473137

z2 = +0.309016994374947451 +0.951056516295153642

z3 = +0.309016994374947451 -0.951056516295153642

z4 = +1.000000000000000000 +0.000000000000000000

which agrees with the analytic result, z_n = \exp(2 \pi n i/5).

The balanced-QR method and its error analysis are described in the

following papers.

-

R.S. Martin, G. Peters and J.H. Wilkinson, "The QR Algorithm for Real

Hessenberg Matrices", Numerische Mathematik, 14 (1970), 219--231.

-

B.N. Parlett and C. Reinsch, "Balancing a Matrix for Calculation of

Eigenvalues and Eigenvectors", Numerische Mathematik, 13 (1969),

293--304.

-

A. Edelman and H. Murakami, "Polynomial roots from companion matrix

eigenvalues", Mathematics of Computation, Vol. 64 No. 210

(1995), 763--776.

This chapter describes the GSL special function library. The library

includes routines for calculating the values of Airy functions, Bessel

functions, Clausen functions, Coulomb wave functions, Coupling

coefficients, the Dawson function, Debye functions, Dilogarithms,

Elliptic integrals, Jacobi elliptic functions, Error functions,

Exponential integrals, Fermi-Dirac functions, Gamma functions,

Gegenbauer functions, Hypergeometric functions, Laguerre functions,

Legendre functions and Spherical Harmonics, the Psi (Digamma) Function,

Synchrotron functions, Transport functions, Trigonometric functions and

Zeta functions. Each routine also computes an estimate of the numerical

error in the calculated value of the function.

The functions are declared in individual header files, such as

`gsl_sf_airy.h', `gsl_sf_bessel.h', etc. The complete set of

header files can be included using the file `gsl_sf.h'.

The special functions are available in two calling conventions, a

natural form which returns the numerical value of the function and

an error-handling form which returns an error code. The two types

of function provide alternative ways of accessing the same underlying

code.

The natural form returns only the value of the function and can be

used directly in mathematical expressions. For example, the following

function call will compute the value of the Bessel function

J_0(x),

double y = gsl_sf_bessel_J0 (x);

There is no way to access an error code or to estimate the error using

this method. To allow access to this information the alternative

error-handling form stores the value and error in a modifiable argument,

gsl_sf_result result;

int status = gsl_sf_bessel_J0_e (x, &result);

The error-handling functions have the suffix _e. The returned

status value indicates error conditions such as overflow, underflow or

loss of precision. If there are no errors the error-handling functions

return GSL_SUCCESS.

The error-handling form of the special functions always calculates an

error estimate along with the value of the result. Therefore,

structures are provided for amalgamating a value and error estimate.

These structures are declared in the header file `gsl_sf_result.h'.

The gsl_sf_result struct contains value and error fields.

typedef struct

{

double val;

double err;

} gsl_sf_result;

The field val contains the value and the field err contains

an estimate of the absolute error in the value.

In some cases, an overflow or underflow can be detected and handled by a

function. In this case, it may be possible to return a scaling exponent

as well as an error/value pair in order to save the result from

exceeding the dynamic range of the built-in types. The

gsl_sf_result_e10 struct contains value and error fields as well

as an exponent field such that the actual result is obtained as

result * 10^(e10).

typedef struct

{

double val;

double err;

int e10;

} gsl_sf_result_e10;

The goal of the library is to achieve double precision accuracy wherever

possible. However the cost of evaluating some special functions to

double precision can be significant, particularly where very high order

terms are required. In these cases a mode argument allows the

accuracy of the function to be reduced in order to improve performance.

The following precision levels are available for the mode argument,

GSL_PREC_DOUBLE

-

Double-precision, a relative accuracy of approximately 2 * 10^-16.

GSL_PREC_SINGLE

-

Single-precision, a relative accuracy of approximately 10^-7.

GSL_PREC_APPROX

-

Approximate values, a relative accuracy of approximately 5 * 10^-4.

The approximate mode provides the fastest evaluation at the lowest

accuracy.

The Airy functions Ai(x) and Bi(x) are defined by the

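integral representations,

Ai(x) = (1/\pi) \int_0^\infty \cos((1/3) t^3 + x t) dt

Bi(x) = (1/\pi) \int_0^\infty (\exp(-(1/3) t^3 + x t) + \sin((1/3) t^3 + x t)) dt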

For further information see Abramowitz & Stegun, Section 10.4. The Airy

functions are defined in the header file `gsl_sf_airy.h'.

- Function: double gsl_sf_airy_Ai (double x, gsl_mode_t mode)

-

- Function: int gsl_sf_airy_Ai_e (double x, gsl_mode_t mode, gsl_sf_result * result)

-

These routines compute the Airy function Ai(x) with an accuracy

specified by mode.

- Function: double gsl_sf_airy_Bi (double x, gsl_mode_t mode)

-

- Function: int gsl_sf_airy_Bi_e (double x, gsl_mode_t mode, gsl_sf_result * result)

-

These routines compute the Airy function Bi(x) with an accuracy

specified by mode.

- Function: double gsl_sf_airy_Ai_scaled (double x, gsl_mode_t mode)

-

- Function: int gsl_sf_airy_Ai_scaled_e (double x, gsl_mode_t mode, gsl_sf_result * result)

-

These routines compute a scaled version of the Airy function

S_A(x) Ai(x). For x>0 the scaling factor S_A(x) is

\exp(+(2/3) x^(3/2)), and is 1 for x<0.

- Function: double gsl_sf_airy_Bi_scaled (double x, gsl_mode_t mode)

-

- Function: int gsl_sf_airy_Bi_scaled_e (double x, gsl_mode_t mode, gsl_sf_result * result)

-

These routines compute a scaled version of the Airy function

S_B(x) Bi(x). For x>0 the scaling factor S_B(x) is

\exp(-(2/3) x^(3/2)), and is 1 for x<0.

- Function: double gsl_sf_airy_Ai_deriv (double x, gsl_mode_t mode)

-

- Function: int gsl_sf_airy_Ai_deriv_e (double x, gsl_mode_t mode, gsl_sf_result * result)

-

These routines compute the Airy function derivative Ai'(x) with

an accuracy specified by mode.

- Function: double gsl_sf_airy_Bi_deriv (double x, gsl_mode_t mode)

-

- Function: int gsl_sf_airy_Bi_deriv_e (double x, gsl_mode_t mode, gsl_sf_result * result)

-

These routines compute the Airy function derivative Bi'(x) with

an accuracy specified by mode.

- Function: double gsl_sf_airy_Ai_deriv_scaled (double x, gsl_mode_t mode)

-

- Function: int gsl_sf_airy_Ai_deriv_scaled_e (double x, gsl_mode_t mode, gsl_sf_result * result)

-

These routines compute the scaled Airy function derivative

S_A(x) Ai'(x).

- Function: double gsl_sf_airy_Bi_deriv_scaled (double x, gsl_mode_t mode)

-

- Function: int gsl_sf_airy_Bi_deriv_scaled_e (double x, gsl_mode_t mode, gsl_sf_result * result)

-

These routines compute the scaled Airy function derivative

S_B(x) Bi'(x).

- Function: double gsl_sf_airy_zero_Ai (unsigned int s)

-

- Function: int gsl_sf_airy_zero_Ai_e (unsigned int s, gsl_sf_result * result)

-

These routines compute the location of the s-th zero of the Airy

function Ai(x).

- Function: double gsl_sf_airy_zero_Bi (unsigned int s)

-

- Function: int gsl_sf_airy_zero_Bi_e (unsigned int s, gsl_sf_result * result)

-

These routines compute the location of the s-th zero of the Airy

function Bi(x).

- Function: double gsl_sf_airy_zero_Ai_deriv (unsigned int s)

-

- Function: int gsl_sf_airy_zero_Ai_deriv_e (unsigned int s, gsl_sf_result * result)

-

These routines compute the location of the s-th zero of the Airy

function derivative Ai'(x).

- Function: double gsl_sf_airy_zero_Bi_deriv (unsigned int s)

-

- Function: int gsl_sf_airy_zero_Bi_deriv_e (unsigned int s, gsl_sf_result * result)

-

These routines compute the location of the s-th zero of the Airy

function derivative Bi'(x).

The routines described in this section compute the cylindrical Bessel

functions J_n(x), Y_n(x); the modified cylindrical Bessel

functions I_n(x), K_n(x); the spherical Bessel functions

j_l(x), y_l(x); and the modified spherical Bessel functions

i_l(x), k_l(x). For more information see Abramowitz & Stegun,

Chapters 9 and 10. The Bessel functions are declared in the header file

`gsl_sf_bessel.h'.

- Function: double gsl_sf_bessel_J0 (double x)

-

- Function: int gsl_sf_bessel_J0_e (double x, gsl_sf_result * result)

-

These routines compute the regular cylindrical Bessel function of zeroth

order, J_0(x).

- Function: double gsl_sf_bessel_J1 (double x)

-

- Function: int gsl_sf_bessel_J1_e (double x, gsl_sf_result * result)

-

These routines compute the regular cylindrical Bessel function of first

order, J_1(x).

- Function: double gsl_sf_bessel_Jn (int n, double x)

-

- Function: int gsl_sf_bessel_Jn_e (int n, double x, gsl_sf_result * result)

-

These routines compute the regular cylindrical Bessel function of

order n, J_n(x).

- Function: int gsl_sf_bessel_Jn_array (int nmin, int nmax, double x, double result_array[])

-

This routine computes the values of the regular cylindrical Bessel

functions J_n(x) for n from nmin to nmax

inclusive, storing the results in the array result_array. The

values are computed using recurrence relations, for efficiency, and

therefore may differ slightly from the exact values.
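
The idea of filling an array by recurrence can be sketched as follows. This is a textbook downward (Miller) recurrence and is not taken from the library's source. The name `bessel_J_downward`, the starting margin of 30 orders and the restriction to 0 < x <= 50 are all assumptions made for the illustration.

```c
#include <assert.h>
#include <math.h>

/* Hypothetical helper (not a GSL function): fills out[0..nmax] with
   J_0(x) .. J_nmax(x) using the recurrence
       J_{n-1}(x) = (2n/x) J_n(x) - J_{n+1}(x)
   applied downwards from a high starting order. */
void bessel_J_downward(int nmax, double x, double out[])
{
    int top, nstart, n, k;
    double next = 0.0;   /* trial value of J_{n+1}              */
    double cur = 1.0;    /* trial value of J_n, arbitrary scale */
    double sum = 0.0;    /* J_0 + 2 J_2 + 2 J_4 + ... (trial)   */

    assert(nmax >= 0 && x > 0.0 && x <= 50.0);

    /* Start at an even order well above both nmax and x, where the
       true J_n(x) is negligible. The margin is an ad hoc choice. */
    top = (nmax > (int) x) ? nmax : (int) x;
    nstart = 2 * (top / 2) + 30;

    for (n = nstart; n >= 1; n--) {
        double prev;
        if (n <= nmax) out[n] = cur;
        if (n % 2 == 0) sum += 2.0 * cur;

        prev = (2.0 * n / x) * cur - next;   /* trial J_{n-1} */
        next = cur;
        cur = prev;

        /* Rescale all trial values together to avoid overflow. */
        if (fabs(cur) > 1.0e150) {
            const double s = 1.0e-150;
            cur *= s; next *= s; sum *= s;
            for (k = n; k <= nmax; k++) out[k] *= s;
        }
    }
    out[0] = cur;
    sum += cur;

    /* Fix the scale with the identity J_0 + 2 (J_2 + J_4 + ...) = 1. */
    for (k = 0; k <= nmax; k++) out[k] /= sum;
}
```

The recurrence is run downwards because the upward direction loses accuracy for J_n(x) once n exceeds x. Each value inherits rounding error from its neighbours, which is one reason array results can differ slightly from values computed one order at a time.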

- Function: double gsl_sf_bessel_Y0 (double x)

-

- Function: int gsl_sf_bessel_Y0_e (double x, gsl_sf_result * result)

-

These routines compute the irregular cylindrical Bessel function of zeroth

order, Y_0(x), for x>0.

- Function: double gsl_sf_bessel_Y1 (double x)

-

- Function: int gsl_sf_bessel_Y1_e (double x, gsl_sf_result * result)

-

These routines compute the irregular cylindrical Bessel function of first

order, Y_1(x), for x>0.

- Function: double gsl_sf_bessel_Yn (int n, double x)

-

- Function: int gsl_sf_bessel_Yn_e (int n, double x, gsl_sf_result * result)

-

These routines compute the irregular cylindrical Bessel function of

order n, Y_n(x), for x>0.

- Function: int gsl_sf_bessel_Yn_array (int nmin, int nmax, double x, double result_array[])

-

This routine computes the values of the irregular cylindrical Bessel

functions Y_n(x) for n from nmin to nmax

inclusive, storing the results in the array result_array. The

domain of the function is x>0. The values are computed using

recurrence relations, for efficiency, and therefore may differ slightly

from the exact values.

- Function: double gsl_sf_bessel_I0 (double x)

-

- Function: int gsl_sf_bessel_I0_e (double x, gsl_sf_result * result)

-

These routines compute the regular modified cylindrical Bessel function

of zeroth order, I_0(x).

- Function: double gsl_sf_bessel_I1 (double x)

-

- Function: int gsl_sf_bessel_I1_e (double x, gsl_sf_result * result)

-

These routines compute the regular modified cylindrical Bessel function

of first order, I_1(x).

- Function: double gsl_sf_bessel_In (int n, double x)

-

- Function: int gsl_sf_bessel_In_e (int n, double x, gsl_sf_result * result)

-

These routines compute the regular modified cylindrical Bessel function

of order n, I_n(x).

- Function: int gsl_sf_bessel_In_array (int nmin, int nmax, double x, double result_array[])

-

This routine computes the values of the regular modified cylindrical

Bessel functions I_n(x) for n from nmin to

nmax inclusive, storing the results in the array

result_array. The start of the range nmin must be positive

or zero. The values are computed using recurrence relations, for

efficiency, and therefore may differ slightly from the exact values.

- Function: double gsl_sf_bessel_I0_scaled (double x)

-

- Function: int gsl_sf_bessel_I0_scaled_e (double x, gsl_sf_result * result)

-

These routines compute the scaled regular modified cylindrical Bessel

function of zeroth order \exp(-|x|) I_0(x).

- Function: double gsl_sf_bessel_I1_scaled (double x)

-

- Function: int gsl_sf_bessel_I1_scaled_e (double x, gsl_sf_result * result)

-

These routines compute the scaled regular modified cylindrical Bessel

function of first order \exp(-|x|) I_1(x).

- Function: double gsl_sf_bessel_In_scaled (int n, double x)

-

- Function: int gsl_sf_bessel_In_scaled_e (int n, double x, gsl_sf_result * result)

-

These routines compute the scaled regular modified cylindrical Bessel

function of order n, \exp(-|x|) I_n(x).

- Function: int gsl_sf_bessel_In_scaled_array (int nmin, int nmax, double x, double result_array[])

-

This routine computes the values of the scaled regular modified cylindrical

Bessel functions \exp(-|x|) I_n(x) for n from

nmin to nmax inclusive, storing the results in the array

result_array. The start of the range nmin must be positive

or zero. The values are computed using recurrence relations, for

efficiency, and therefore may differ slightly from the exact values.

- Function: double gsl_sf_bessel_K0 (double x)

-

- Function: int gsl_sf_bessel_K0_e (double x, gsl_sf_result * result)

-

These routines compute the irregular modified cylindrical Bessel

function of zeroth order, K_0(x), for x > 0.

- Function: double gsl_sf_bessel_K1 (double x)

-

- Function: int gsl_sf_bessel_K1_e (double x, gsl_sf_result * result)

-

These routines compute the irregular modified cylindrical Bessel

function of first order, K_1(x), for x > 0.

- Function: double gsl_sf_bessel_Kn (int n, double x)

-

- Function: int gsl_sf_bessel_Kn_e (int n, double x, gsl_sf_result * result)

-

These routines compute the irregular modified cylindrical Bessel

function of order n, K_n(x), for x > 0.

- Function: int gsl_sf_bessel_Kn_array (int nmin, int nmax, double x, double result_array[])

-

This routine computes the values of the irregular modified cylindrical

Bessel functions K_n(x) for n from nmin to

nmax inclusive, storing the results in the array

result_array. The start of the range nmin must be positive

or zero. The domain of the function is x>0. The values are

computed using recurrence relations, for efficiency, and therefore

may differ slightly from the exact values.

- Function: double gsl_sf_bessel_K0_scaled (double x)

-

- Function: int gsl_sf_bessel_K0_scaled_e (double x, gsl_sf_result * result)

-

These routines compute the scaled irregular modified cylindrical Bessel

function of zeroth order \exp(x) K_0(x) for x>0.

- Function: double gsl_sf_bessel_K1_scaled (double x)

-

- Function: int gsl_sf_bessel_K1_scaled_e (double x, gsl_sf_result * result)

-

These routines compute the scaled irregular modified cylindrical Bessel

function of first order \exp(x) K_1(x) for x>0.

- Function: double gsl_sf_bessel_Kn_scaled (int n, double x)

-

- Function: int gsl_sf_bessel_Kn_scaled_e (int n, double x, gsl_sf_result * result)

-

These routines compute the scaled irregular modified cylindrical Bessel

function of order n, \exp(x) K_n(x), for x>0.

- Function: int gsl_sf_bessel_Kn_scaled_array (int nmin, int nmax, double x, double result_array[])

-

This routine computes the values of the scaled irregular modified cylindrical

Bessel functions \exp(x) K_n(x) for n from nmin to

nmax inclusive, storing the results in the array

result_array. The start of the range nmin must be positive

or zero. The domain of the function is x>0. The values are

computed using recurrence relations, for efficiency, and therefore

may differ slightly from the exact values.
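
For the irregular modified functions the recurrence K_{n+1}(x) = K_{n-1}(x) + (2n/x) K_n(x) can be applied upwards from the two lowest orders. The factor \exp(x) is the same for every order, so the same relation holds for the scaled values. The sketch below shows the idea under the assumption that the caller supplies the two seed values, for instance from the order-zero and order-one routines above. The name `bessel_K_upward` is hypothetical, and this is not claimed to be the library's implementation.

```c
#include <assert.h>

/* Hypothetical helper (not a GSL function): given k0 = K_0(x) and
   k1 = K_1(x), or both multiplied by exp(x), fills out[0..nmax] with
   the corresponding values for orders 0 .. nmax. */
void bessel_K_upward(int nmax, double x, double k0, double k1,
                     double out[])
{
    int n;

    assert(nmax >= 0 && x > 0.0);   /* K_n(x) is defined for x > 0 */

    out[0] = k0;
    if (nmax >= 1) out[1] = k1;

    for (n = 1; n < nmax; n++) {
        /* K_{n+1}(x) = K_{n-1}(x) + (2n/x) K_n(x) */
        out[n + 1] = out[n - 1] + (2.0 * n / x) * out[n];
    }
}
```

Every term in the relation is positive for x > 0, so no cancellation occurs and the upward direction is well behaved. The only error accumulated is ordinary rounding, which is consistent with the small differences mentioned above.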

- Function: double gsl_sf_bessel_j0 (double x)

-

- Function: int gsl_sf_bessel_j0_e (double x, gsl_sf_result * result)

-

These routines compute the regular spherical Bessel function of zeroth

order, j_0(x) = \sin(x)/x.

- Function: double gsl_sf_bessel_j1 (double x)

-